A (Practical) Framework for Quantifying Cyber Risk: Part 2

Introduction

In this article, I demonstrate step by step how to apply the FAIR (Factor Analysis of Information Risk) methodology introduced in Part 1.

Implementing FAIR: a Step-by-Step Process

Before beginning a FAIR analysis, make sure you have gathered the following:

Executive Sponsorship: Leadership buy-in is critical for resource allocation and organizational acceptance.

Subject Matter Experts (SMEs): Access to individuals with deep knowledge of assets, threats, and business impacts.

Historical Data: Internal incident logs, vulnerability assessments, and financial records.

External Benchmarks: Industry reports (e.g., Verizon DBIR, Ponemon Institute studies) for comparative data.

Tools: Spreadsheet software (Excel), statistical software (Python with NumPy/SciPy), or dedicated FAIR tools.

Step 1: Define Your Risk Scenario

A well-defined risk scenario is the foundation of any FAIR analysis. It should clearly articulate:

Asset: What is at risk? (e.g., customer database, payment processing system, intellectual property)

Threat: Who might act against the asset? (e.g., external hackers, insider threats, nation-state actors)

Threat Type: What action might they take? (e.g., ransomware attack, data exfiltration, DDoS)

Effect: What is the potential impact? (e.g., data breach, system downtime, regulatory violation)

Example Scenario: “Financial loss from a successful ransomware attack targeting the organization’s primary file server by an external cybercriminal group, resulting in system downtime, data recovery costs, and potential regulatory fines.”
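If you plan to analyze several scenarios, it can help to capture each one as a structured record so that none of the four elements stays implicit and every scenario is documented the same way. The following is a minimal Python sketch; the `RiskScenario` class and its sentence template are hypothetical illustrations, not part of FAIR itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskScenario:
    """One FAIR risk scenario, split into the four elements from Step 1 (hypothetical helper)."""
    asset: str        # what is at risk
    threat: str       # who might act against the asset
    threat_type: str  # what action they might take
    effect: str       # the potential impact

    def describe(self) -> str:
        """Render the scenario as a single reviewable sentence."""
        return (
            f"Financial loss from a {self.threat_type} targeting the {self.asset} "
            f"by {self.threat}, resulting in {self.effect}."
        )


ransomware = RiskScenario(
    asset="primary file server",
    threat="an external cybercriminal group",
    threat_type="successful ransomware attack",
    effect="system downtime, data recovery costs, and potential regulatory fines",
)
print(ransomware.describe())
```

Keeping the elements as separate fields makes it easy to spot a scenario that is too broad (for example, an asset of "everything").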

Step 2: Estimate Loss Event Frequency (LEF)

As you may recall from my previous article, LEF represents the probable frequency of loss events within a specified time frame (typically one year), i.e., how many times per year you expect the loss to materialize. You can estimate LEF directly or derive it from its components.

Option A: Direct LEF Estimation

If you have sufficient historical data or industry benchmarks, you can estimate LEF directly:

Gather Data: Review internal incident logs, threat intelligence reports, and industry studies.

Define PERT Parameters:

Minimum: The lowest credible number of events per year (e.g., 0)

Mode: The most likely number of events per year (e.g., 1)

Maximum: The highest credible number of events per year (e.g., 5)

Calibrate Estimates: Use calibrated SME judgment to refine estimates.

NOTE: Several free online sources can help you estimate how often a risk might materialize. See the Tools and Resources section at the end of this article for some ideas.
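To make the PERT parameters concrete, here is a minimal Python sketch (assuming NumPy is installed) that samples a direct LEF estimate using the example values 0 / 1 / 5. It uses the classic PERT construction, a Beta distribution rescaled to the [min, max] range; the `sample_pert` helper is my own illustration, not a library function.

```python
import numpy as np


def sample_pert(minimum, mode, maximum, size, rng, lamb=4.0):
    """Draw samples from a PERT distribution (a Beta rescaled to [minimum, maximum]).

    lamb=4 gives the classic PERT weighting of the mode.
    """
    if not minimum <= mode <= maximum or minimum == maximum:
        raise ValueError("need minimum <= mode <= maximum and minimum < maximum")
    span = maximum - minimum
    alpha = 1 + lamb * (mode - minimum) / span
    beta = 1 + lamb * (maximum - mode) / span
    return minimum + rng.beta(alpha, beta, size=size) * span


rng = np.random.default_rng(seed=42)
# Direct LEF estimate from Option A: min 0, mode 1, max 5 events per year
lef = sample_pert(0, 1, 5, size=100_000, rng=rng)
# The analytic PERT mean is (0 + 4*1 + 5) / 6 = 1.5, so the sample mean should land close to it
print(f"mean LEF ≈ {lef.mean():.2f} events/year")
```

The samples are continuous (for example, 1.37 events per year), which is fine for an annualized rate.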

Option B: Derive LEF from TEF and Vulnerability

If direct estimation is not feasible, break down LEF into its components:

LEF = Threat Event Frequency (TEF) × Vulnerability

Estimate Threat Event Frequency (TEF):

How often does the threat community attempt to act against the asset?

Sources: Firewall logs, IDS/IPS alerts, threat intelligence feeds

PERT parameters: min, mode, max (e.g., 0, 2, 10 attempts per year)

Estimate Vulnerability:

What is the probability that a threat event becomes a loss event?

Factors: Control strength, threat capability, asset resistance

PERT parameters: min, mode, max (e.g., 0.05, 0.20, 0.50 probability)
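Deriving LEF from its components can be sketched the same way: sample TEF and Vulnerability independently from their PERT distributions and multiply them element-wise. This is a minimal Python sketch assuming NumPy and independence between the two inputs; the parameters are the example values above.

```python
import numpy as np


def sample_pert(minimum, mode, maximum, size, rng):
    """PERT samples via a rescaled Beta distribution (classic lambda = 4)."""
    span = maximum - minimum
    alpha = 1 + 4 * (mode - minimum) / span
    beta = 1 + 4 * (maximum - mode) / span
    return minimum + rng.beta(alpha, beta, size=size) * span


rng = np.random.default_rng(seed=7)
n = 100_000

# TEF: threat events (attempts) per year, using the example 0 / 2 / 10
tef = sample_pert(0, 2, 10, n, rng)
# Vulnerability: probability a threat event becomes a loss event, 0.05 / 0.20 / 0.50
vulnerability = sample_pert(0.05, 0.20, 0.50, n, rng)

# LEF = TEF x Vulnerability, computed element-wise for each simulated year
lef = tef * vulnerability

print(f"mean TEF ≈ {tef.mean():.2f}, mean Vulnerability ≈ {vulnerability.mean():.3f}")
print(f"mean LEF ≈ {lef.mean():.2f}, 90th percentile ≈ {np.percentile(lef, 90):.2f}")
```

With these inputs the mean LEF comes out near 3.0 × 0.225 = 0.675 loss events per year.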

NOTE: (If you are not familiar with PERT, here is a handy explanation.)
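For quick reference, the classic PERT distribution is a Beta distribution rescaled to the [min, max] range. The shape parameters and mean are shown below, followed by a worked example using the Option A LEF parameters (0, 1, 5).

```latex
\alpha = 1 + 4\,\frac{\text{mode} - \text{min}}{\text{max} - \text{min}}, \qquad
\beta = 1 + 4\,\frac{\text{max} - \text{mode}}{\text{max} - \text{min}}

X = \text{min} + (\text{max} - \text{min})\,B, \qquad B \sim \mathrm{Beta}(\alpha, \beta),
\qquad \mathbb{E}[X] = \frac{\text{min} + 4\,\text{mode} + \text{max}}{6}

\text{LEF (0, 1, 5):}\quad \alpha = 1 + 4\cdot\tfrac{1}{5} = 1.8,\quad
\beta = 1 + 4\cdot\tfrac{4}{5} = 4.2,\quad
\mathbb{E}[\text{LEF}] = \tfrac{0 + 4\cdot 1 + 5}{6} = 1.5
```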

Step 3: Estimate Loss Magnitude (LM)

LM represents the probable financial impact of a single loss event. It is composed of primary and secondary losses.

Primary Loss (Direct Costs)

Primary loss includes the immediate, direct costs incurred from the event:

Productivity Loss:

Lost revenue during downtime

Cost of idle employees

Example: $10,000/hour × 24 hours = $240,000

Response Costs:

Incident response team fees

Forensic investigation

Legal counsel

Example: $50,000 - $200,000

Replacement Costs:

Hardware/software replacement

Data restoration

Example: $20,000 - $100,000

Total Primary Loss PERT Parameters:

Minimum: $50,000

Mode: $200,000

Maximum: $1,000,000
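A quick sanity check on these parameters is the analytic PERT mean and standard deviation. The sketch below (plain Python, no dependencies; the helper names are my own) shows that the long right tail pulls the mean loss above the $200,000 mode.

```python
import math


def pert_mean(minimum, mode, maximum):
    """Analytic mean of a classic PERT distribution."""
    return (minimum + 4 * mode + maximum) / 6


def pert_std(minimum, mode, maximum):
    """Analytic standard deviation of a classic PERT distribution."""
    mean = pert_mean(minimum, mode, maximum)
    return math.sqrt((mean - minimum) * (maximum - mean) / 7)


plm = (50_000, 200_000, 1_000_000)  # min, mode, max from the example above
print(f"PLM mean ≈ ${pert_mean(*plm):,.0f}")  # → PLM mean ≈ $308,333
print(f"PLM std  ≈ ${pert_std(*plm):,.0f}")
```

If the mean looks implausibly high to your SMEs, that is usually a sign the maximum deserves another look.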

Secondary Loss (Indirect Costs)

Secondary loss includes the indirect costs that arise from how stakeholders (e.g., regulators, customers, competitors) react to the event:

Fines and Judgements:

Regulatory penalties (e.g., GDPR, HIPAA)

Legal settlements

Example: $0 - $500,000

Reputation Damage:

Customer churn

Increased customer acquisition costs

Brand value erosion

Example: $0 - $1,000,000

Competitive Advantage:

Loss of intellectual property

Market share erosion

Example: $0 - $500,000

Secondary Loss Considerations:

Not all primary loss events trigger secondary losses

Estimate the probability that a secondary loss occurs (e.g., 30%)

Define PERT parameters for secondary loss magnitude when it does occur
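One way to combine the occurrence probability with a secondary-loss distribution is to draw, per simulated event, whether secondary loss happens at all, and only then add its magnitude to the primary loss. This is a minimal Python sketch assuming NumPy. The 30% probability and the primary-loss parameters come from above; the secondary-loss parameters (0 / 250,000 / 2,000,000) are hypothetical, with the maximum set to the sum of the three example maxima.

```python
import numpy as np


def sample_pert(minimum, mode, maximum, size, rng):
    """PERT samples via a rescaled Beta distribution (classic lambda = 4)."""
    span = maximum - minimum
    alpha = 1 + 4 * (mode - minimum) / span
    beta = 1 + 4 * (maximum - mode) / span
    return minimum + rng.beta(alpha, beta, size=size) * span


rng = np.random.default_rng(seed=11)
n = 100_000

# Primary loss per event, using the Total Primary Loss parameters above
plm = sample_pert(50_000, 200_000, 1_000_000, n, rng)

# Secondary loss occurs in only some events (30% in the example above)
p_secondary = 0.30
occurs = rng.random(n) < p_secondary
# HYPOTHETICAL secondary-loss magnitude when it does occur (illustrative min/mode/max)
slm_if_occurs = sample_pert(0, 250_000, 2_000_000, n, rng)
slm = np.where(occurs, slm_if_occurs, 0.0)

lm = plm + slm  # loss magnitude of a single event
for q in (5, 50, 95):
    print(f"P{q} single-event loss ≈ ${np.percentile(lm, q):,.0f}")
```

The 5th, 50th and 95th percentiles give a simulation-based version of the “at least / most likely / worst case” range described next.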

After you sum the two components, primary loss magnitude (PLM) and secondary loss magnitude (SLM), you should have enough to make a statement like: “If this risk materializes, we stand to lose at least X (minimum), most likely Y (mode), and, in the worst case, Z (maximum).” That is much more useful than calling the risk High or Medium. Agreed?

However, if this quantification is still too vague and you want to stress-test it, proceed with Step 4: Run a Monte Carlo simulation. If you feel your calculation is already fit for purpose, proceed directly to Step 5: Present Results to your Stakeholders.

Step 4: Run a Monte Carlo Simulation (Optional)

With LEF and LM parameters defined, we can run a Monte Carlo simulation to estimate the distribution of annualized loss (i.e., how much this risk is likely to cost the organization in a given year):

Set Simulation Parameters:

Number of iterations: 10,000 - 100,000 (more iterations = more precision)

Random seed: For reproducibility (optional)

Execute Simulation:

For each iteration, randomly sample from the PERT distributions for LEF and LM

Calculate ALE = LEF × LM

Store the result

Aggregate Results:

Compile all ALE values into a distribution

Calculate key metrics (mean, median, percentiles)
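The steps above can be sketched in Python with NumPy. This follows them literally: one LEF draw and one LM draw per iteration, multiplied to give ALE, with an optional seed for reproducibility. The inputs reuse the earlier example parameters and are not necessarily the ones behind the figures quoted below. Some practitioners instead draw a Poisson count of events per year and sum a separate loss per event; this sketch does not do that.

```python
import numpy as np


def sample_pert(minimum, mode, maximum, size, rng):
    """PERT samples via a rescaled Beta distribution (classic lambda = 4)."""
    span = maximum - minimum
    alpha = 1 + 4 * (mode - minimum) / span
    beta = 1 + 4 * (maximum - mode) / span
    return minimum + rng.beta(alpha, beta, size=size) * span


def simulate_ale(lef_params, lm_params, iterations=50_000, seed=None):
    """Monte Carlo ALE: one LEF draw and one LM draw per iteration, ALE = LEF x LM."""
    rng = np.random.default_rng(seed)  # fixed seed -> reproducible results
    lef = sample_pert(*lef_params, iterations, rng)
    lm = sample_pert(*lm_params, iterations, rng)
    return lef * lm  # one ALE value per iteration


ale = simulate_ale(lef_params=(0, 1, 5), lm_params=(50_000, 200_000, 1_000_000), seed=2024)
print(f"Mean ALE:   ${ale.mean():,.0f}")
print(f"Median ALE: ${np.median(ale):,.0f}")
print(f"95% VaR:    ${np.percentile(ale, 95):,.0f}")
```

Because LEF and LM are sampled independently here, the mean ALE should land near 1.5 × $308,333 ≈ $462,500.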

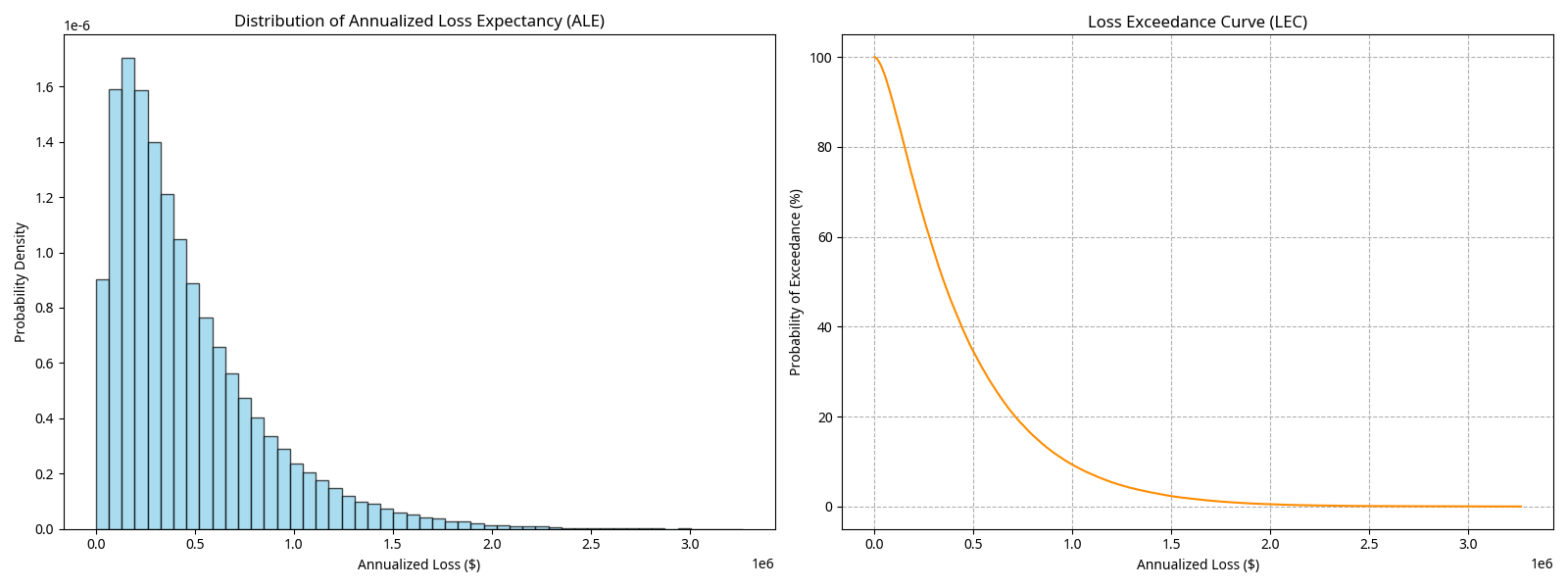

Outputs of a Monte Carlo Simulation: ALE and LEC (Loss Exceedance Curve)

Analyze and Interpret Results

The output of the simulation provides some actionable insights:

Mean ALE: The average expected annual loss. Use this for budgeting and resource allocation.

Median ALE: The middle value of the distribution, less influenced by extreme outliers.

95% Value at Risk (VaR): There is a 5% chance that losses will exceed this amount in a given year. This is a “1-in-20-year” event and is useful for setting risk tolerance thresholds.

Loss Exceedance Curve: Visualizes the probability of exceeding any given loss amount.
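Given an array of simulated annual losses, these metrics take only a few lines. The helpers below are a minimal NumPy sketch with names of my own choosing; the toy array stands in for real simulation output.

```python
import numpy as np


def summarize(losses, var_level=95):
    """Key metrics from an array of simulated annual losses."""
    losses = np.asarray(losses, dtype=float)
    return {
        "mean": losses.mean(),
        "median": np.median(losses),
        f"var_{var_level}": np.percentile(losses, var_level),
    }


def loss_exceedance_curve(losses, thresholds):
    """Probability that the simulated annual loss exceeds each threshold."""
    losses = np.asarray(losses, dtype=float)
    thresholds = np.asarray(thresholds, dtype=float)
    # For each threshold, the share of iterations whose loss is strictly greater
    return (losses[None, :] > thresholds[:, None]).mean(axis=1)


toy = np.arange(0, 1_000, 100)  # 0, 100, ..., 900: a tiny illustrative sample
print(summarize(toy))
print(loss_exceedance_curve(toy, [0, 450, 900]))  # → [0.9 0.5 0. ]
```

With a real ALE array, `loss_exceedance_curve(ale, [1_000_000])` gives the probability of exceeding a $1 million tolerance. Plotting a range of thresholds against the result (e.g., with Matplotlib) draws the LEC.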

Example Interpretation:

Mean ALE: $459,529

95% VaR: $1,225,861

“On average, we expect to lose approximately $460,000 per year from this risk. However, there is a 5% chance that losses could exceed $1.2 million in a given year. If our risk tolerance is set at $1 million, we should consider additional mitigation measures.”

Microsoft Excel can run Monte Carlo simulations without @RISK or other expensive add-ins, which makes it a practical choice for FAIR quantification work. In Part 3 of this series, I will show how to set up and run a Monte Carlo simulation in Excel.

Step 5: Present Results to your Stakeholders

It is now time to translate technical findings into business language:

Executive Summary: One-page overview with key metrics and recommendations.

Visual Aids: Use histograms and loss exceedance curves to illustrate risk.

Comparison to Risk Tolerance: Show how the risk compares to acceptable thresholds.

Mitigation Options: Present cost-benefit analysis of potential controls.

Decision Framework: Recommend acceptance, mitigation, transfer (insurance), or avoidance.
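For the mitigation options, a simple ROSI-style (return on security investment) comparison weighs the reduction in mean ALE that a control buys against its annual cost. This is a minimal Python sketch: the baseline reuses the $459,529 mean ALE from the example interpretation above, while the residual ALE and control cost are hypothetical figures.

```python
def control_cost_benefit(baseline_ale, residual_ale, annual_control_cost):
    """Compare a control's annual cost with the reduction in mean ALE it buys."""
    if annual_control_cost <= 0:
        raise ValueError("annual_control_cost must be positive")
    risk_reduction = baseline_ale - residual_ale
    net_benefit = risk_reduction - annual_control_cost
    return_on_control = net_benefit / annual_control_cost
    return risk_reduction, net_benefit, return_on_control


# Baseline from the example interpretation; residual ALE and cost are HYPOTHETICAL
reduction, net, roc = control_cost_benefit(459_529, 200_000, 100_000)
print(f"Risk reduction: ${reduction:,.0f}; net benefit: ${net:,.0f}; return: {roc:.0%}")
# → Risk reduction: $259,529; net benefit: $159,529; return: 160%
```

The same comparison can be run on the 95% VaR instead of the mean when the tail, not the average year, drives the decision.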

Common Pitfalls and Best Practices

Pitfalls to Avoid

Overly Broad Scenarios: Scenarios that are too vague (e.g., “cyber risk”) are not actionable. Be specific.

Anchoring Bias: SMEs may anchor on initial estimates. Use independent elicitation.

Ignoring Uncertainty: Single-point estimates ignore the inherent uncertainty in risk. Always use distributions.

Data Quality Issues: Garbage in, garbage out. Validate and document all data sources.

Lack of Documentation: Undocumented assumptions make the analysis indefensible. Record everything.

Best Practices

Start Small: Begin with a high-priority risk scenario to build experience and credibility.

Iterate: FAIR is not a one-time exercise. Regularly update analyses as new data emerges.

Transparency: Clearly document all assumptions, data sources, and methodologies.

Sensitivity Analysis: Test how changes in key assumptions affect the results.

Integrate with Frameworks: Utilize FAIR in conjunction with NIST, ISO 27001, or other frameworks for a comprehensive approach.

Executive Engagement: Regularly communicate results to leadership to maintain buy-in.
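A one-at-a-time sensitivity analysis changes a single input while holding the rest at baseline. The sketch below is plain Python and uses the analytic PERT means instead of rerunning the simulation. This shortcut assumes LEF and LM are independent and only works for the mean ALE; for percentiles such as the 95% VaR, rerun the Monte Carlo simulation. The varied values are hypothetical.

```python
def pert_mean(minimum, mode, maximum):
    """Analytic mean of a classic PERT distribution."""
    return (minimum + 4 * mode + maximum) / 6


def mean_ale(lef, lm):
    """Mean ALE when LEF and LM are independent: E[LEF x LM] = E[LEF] x E[LM]."""
    return pert_mean(*lef) * pert_mean(*lm)


base_lef = (0, 1, 5)
base_lm = (50_000, 200_000, 1_000_000)
baseline = mean_ale(base_lef, base_lm)

# One-at-a-time sensitivity: change a single input, hold the rest at baseline
scenarios = {
    "LEF mode 1 -> 2": ((0, 2, 5), base_lm),
    "LEF max 5 -> 10": ((0, 1, 10), base_lm),
    "LM mode 200k -> 400k": (base_lef, (50_000, 400_000, 1_000_000)),
    "LM max 1M -> 2M": (base_lef, (50_000, 200_000, 2_000_000)),
}
print(f"Baseline mean ALE: ${baseline:,.0f}")
for label, (lef, lm) in scenarios.items():
    value = mean_ale(lef, lm)
    print(f"{label:<22} ${value:>10,.0f} ({value / baseline - 1:+.0%})")
```

Inputs whose change moves the result the most are the ones worth spending SME time and data collection on.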

Tools and Resources

Tools

Excel/Google Sheets: Suitable for simple analyses and templates.

Python (NumPy, SciPy, Matplotlib): Powerful for custom simulations and visualizations.

R (mc2d, fitdistrplus): Statistical analysis and distribution fitting.

Commercial FAIR Tools: RiskLens, Safe Security, CyberSaint (CyberStrong) offer integrated platforms.

Resources

Verizon Data Breach Investigations Report (DBIR): The Verizon DBIR is one of the most comprehensive and widely cited sources for cyber incident frequency data. The 2025 edition analyzed over 22,000 security incidents and 12,195 confirmed data breaches across 139 countries. This data is invaluable for estimating Threat Event Frequency (TEF) in FAIR risk analyses.

ENISA Threat Landscape Report: The European Union Agency for Cybersecurity (ENISA) publishes an annual Threat Landscape report that provides European-focused threat frequency data. The 2025 edition analyzed 4,875 incidents from July 2024 to June 2025. The report is also explicitly mentioned as an authoritative external source for TEF in the FAIR Institute's Analyst's Guide to Cyber Risk Data Sources.

Cyentia Institute's Information Risk Insights Study (IRIS): The Cyentia IRIS report represents the "gold standard in evidence-based security analysis". It draws from Zywave's Cyber Loss Data, containing over 150,000 security incidents and associated losses spanning decades. The 2025 edition found that over 60% of Fortune 1000 companies experienced at least one public breach over the past decade, with one in four Fortune 1000 firms suffering a cyber loss event annually. IRIS provides statistically rigorous analysis with confidence intervals and peer-reviewed findings, making it excellent for benchmarking TEF estimates.

Industry-Specific ISACs (Information Sharing and Analysis Centers): ISACs are sector-specific organizations that provide centralized resources for gathering and sharing cyber threat intelligence among members. There are currently 28 ISACs covering sectors including financial services (FS-ISAC), healthcare (H-ISAC), information technology (IT-ISAC), and others. These organizations offer real-time threat intelligence, early warning alerts, and sector-specific incident frequency data that reflects the unique risks of your industry. ISACs provide both unidirectional intelligence distribution and bidirectional sharing, where members contribute threat data, creating actionable intelligence on threat event frequency tailored to your specific sector.

Cyber Insurance Claims Data and Industry Reports: Cyber insurance providers like Coalition, Allianz Commercial, Munich Re, and Aon publish annual reports based on actual claims data, providing real-world frequency statistics. Coalition's 2024 analysis showed global cyber insurance claims frequency at 1.48%, with Business Email Compromise and Funds Transfer Fraud accounting for 60% of all claims and ransomware representing 20%. Allianz Commercial reported around 300 cyber claims in the first half of 2025, with ransomware accounting for 60% of large claim values. These sources are particularly valuable because they represent actual loss events rather than attempted attacks, which makes them most directly relevant to estimating Loss Event Frequency and a useful cross-check for Threat Event Frequency.